Greetings :-)

I'm Peter Billam; my website is pjb.com.au

I have a particular place in the ecology of Open Source Development,

sort of out on the Lone Wolf fringe.

See also the video on YouTube:

www.youtube.com/watch?v=GnNdxzrt_jc

and a slightly edited version:

MuchMIDIstuff.mp4

(warning: Firefox may fail when showing this file...)

I write things because I need them,

and I write them by myself because they're small enough.

Then I use them because I need them,

and I maintain them because I use them.

This has advantages and disadvantages.

It gives great agility and flexibility;

for example

I can decide when it's appropriate to add features

or break backward-compatibility

without having to wade through any net-political process.

On the other hand, it limits size and complexity;

it also constrains longevity,

since when I stop paying the bills

it's not guaranteed that anyone will take it over,

and if they do, the code and its background will be unfamiliar to them.

MIDI is a standard agreed on in 1983 by

an association of manufacturers,

who probably never dreamed Open Source programmers might write for it.

The standard offers 16 channels, each of which:

MIDI data exists in two forms:

Firstly, in real-time : so when I press a note on my MIDI-keyboard,

a note-on event gets transmitted down the wire.

It's in Real-Time; no timing-information is necessary,

the synthesiser has to perform that event Now.

Of course, in fact there's no such thing as "now",

because of the speed of light,

not to mention the speed of sound,

so there will be some latency.

About 10 milliseconds latency is acceptable.

Of the Linux synths, TiMidity doesn't meet this;

fluidsynth maybe just meets it, on a fast CPU . . .

Secondly, stored in MIDI files, usually with the extension .mid:

these files have to include the timing information.

Each MIDI-event has a delta-time, measured in ticks;

a tick is defined in beats,

and a beat is defined in microseconds.

I mostly use 1 tick = 1 millisecond.
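The tick arithmetic can be sketched as follows. This is only an illustration, not code from any of the modules mentioned in this talk; the function names `bpm_to_us_per_beat` and `ticks_to_ms` are made up for the example. It assumes the usual MIDI-file layout, where the file header gives the number of ticks per beat and a tempo event gives the number of microseconds per beat.

```python
def bpm_to_us_per_beat(bpm):
    """Tempo in beats per minute -> microseconds per beat."""
    return 60_000_000 / bpm


def ticks_to_ms(ticks, ticks_per_beat, us_per_beat):
    """Convert a delta-time in ticks to milliseconds."""
    return ticks * us_per_beat / ticks_per_beat / 1000.0


# 60 beats per minute is 1,000,000 microseconds per beat;
# with 1000 ticks per beat, one tick then lasts one millisecond.
us_per_beat = bpm_to_us_per_beat(60)
print(ticks_to_ms(1, 1000, us_per_beat))
```

With other settings the same delta-time means a different duration: 480 ticks at 96 ticks per beat and 500000 microseconds per beat is 2.5 seconds.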

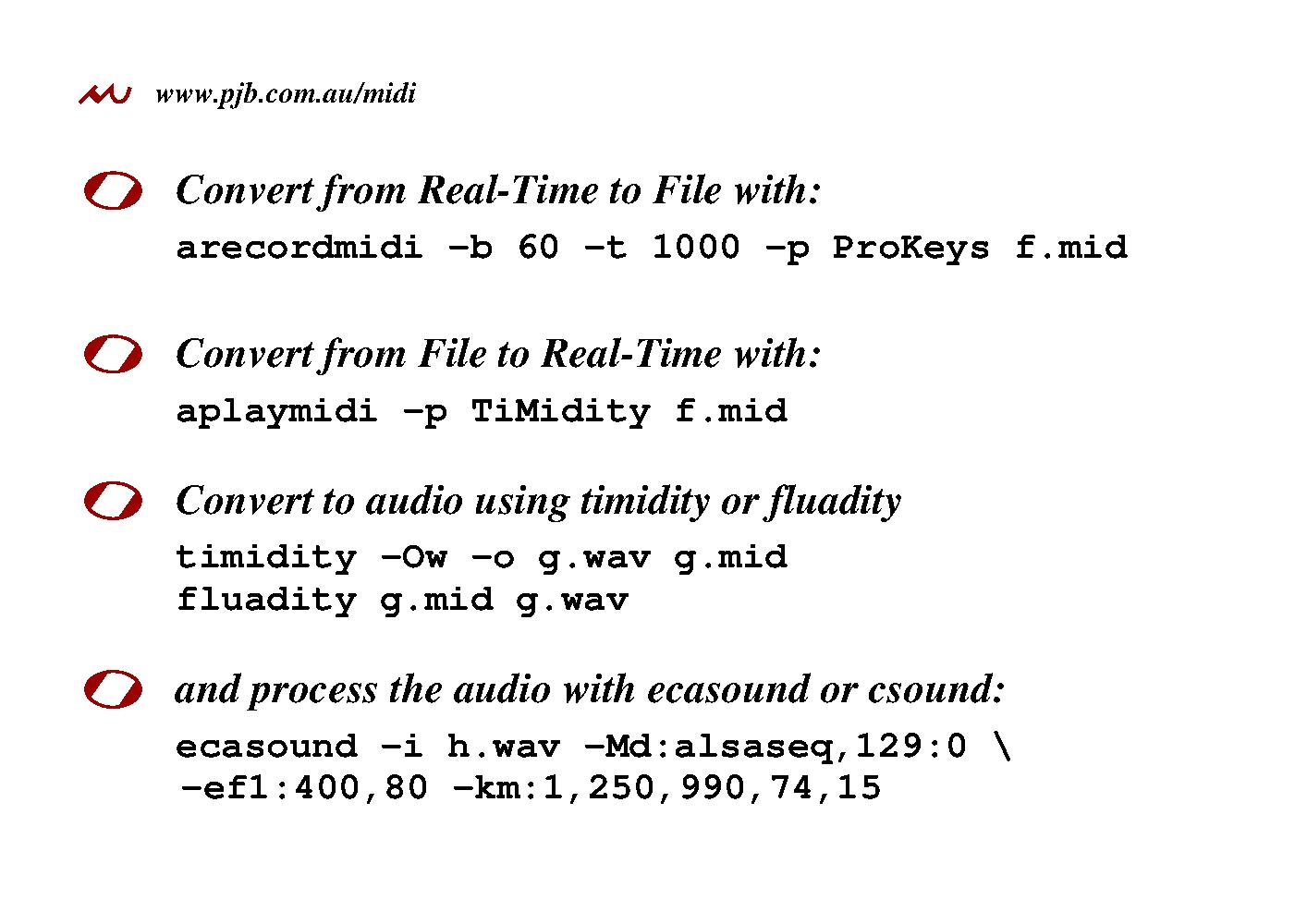

Because MIDI exists in Real-Time and in File form,

you have to be able to convert between them.

Converting from Real-Time to File is called recording,

and in Linux that's done with arecordmidi.

This example sets 60 beats to the minute, and 1000 ticks to the beat,

giving me my default millisecond-tick.

It records from the ALSA-Port ProKeys,

which is my ProKeys 88 MIDI-keyboard.

Converting from File to Real-Time is called playing,

and in Linux that's done with aplaymidi.

This example plays to the TiMidity software synth.
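As a rough sketch of what such commands look like, the Python below only builds the two argument lists. It relies on the `-p` (port), `-b` (beats per minute) and `-t` (ticks per beat) options of arecordmidi and the `-p` option of aplaymidi. The helper names and the file name `take1.mid` are assumptions made for the example, and the port names must match whatever `aplaymidi -l` lists on your own machine.

```python
import shlex


def arecordmidi_argv(port, bpm, ticks_per_beat, outfile):
    """Argument list for recording from an ALSA port into a MIDI file."""
    return ["arecordmidi", "-p", port,
            "-b", str(bpm), "-t", str(ticks_per_beat), outfile]


def aplaymidi_argv(port, infile):
    """Argument list for playing a MIDI file to an ALSA port."""
    return ["aplaymidi", "-p", port, infile]


# 60 beats per minute and 1000 ticks per beat give a millisecond tick.
record_cmd = arecordmidi_argv("ProKeys", 60, 1000, "take1.mid")  # hypothetical file name
play_cmd = aplaymidi_argv("TiMidity", "take1.mid")

print(shlex.join(record_cmd))
print(shlex.join(play_cmd))
```

Either list can be handed to `subprocess.run()` to actually run the command; arecordmidi keeps recording until it is interrupted.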

Once it's recorded into a MIDI-file, you can convert it into an audio format, such as .wav. Here software synths are particularly useful; these examples use timidity and my fluadity.

I won't spend any time on the last example, but it shows how MIDI controllers can also be used to control audio processing, using ecasound.

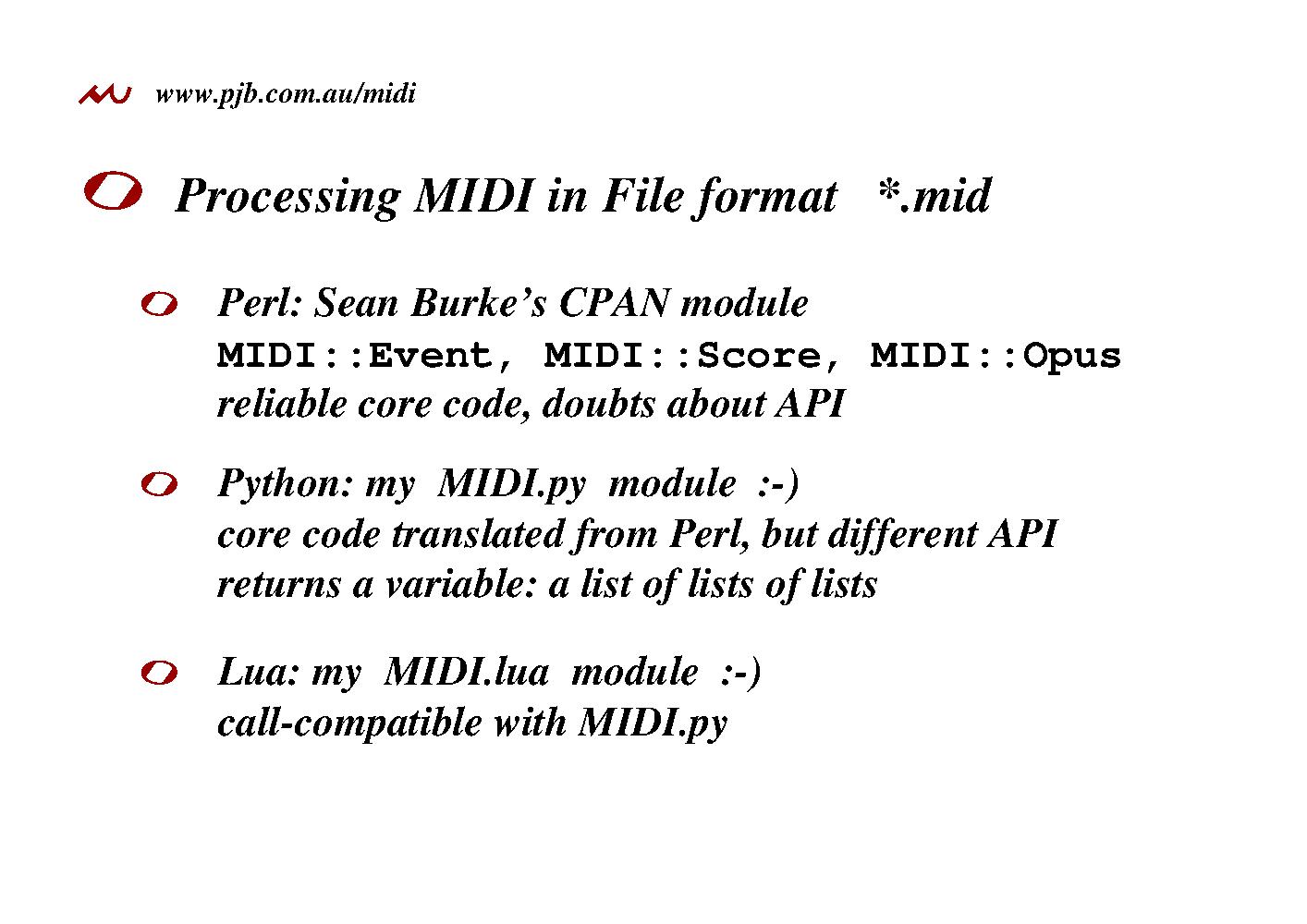

I program in Perl, Python and Lua,

and there are modules to manipulate MIDI files.

They descend from Sean Burke's CPAN module,

which has been running for decades and never gets anything wrong;

but I find the API a bit cumbersome.

I translated the core code into Python, to get

MIDI.py,

but simplified and fleshed out the API

to make it as useful to me as possible.

It's not in PyPI because of a naming-conflict (up to case);

you have to get it from www.pjb.com.au.

This I then translated into Lua, to get

MIDI.lua,

which is very API-compatible, and is

available as a Luarock.

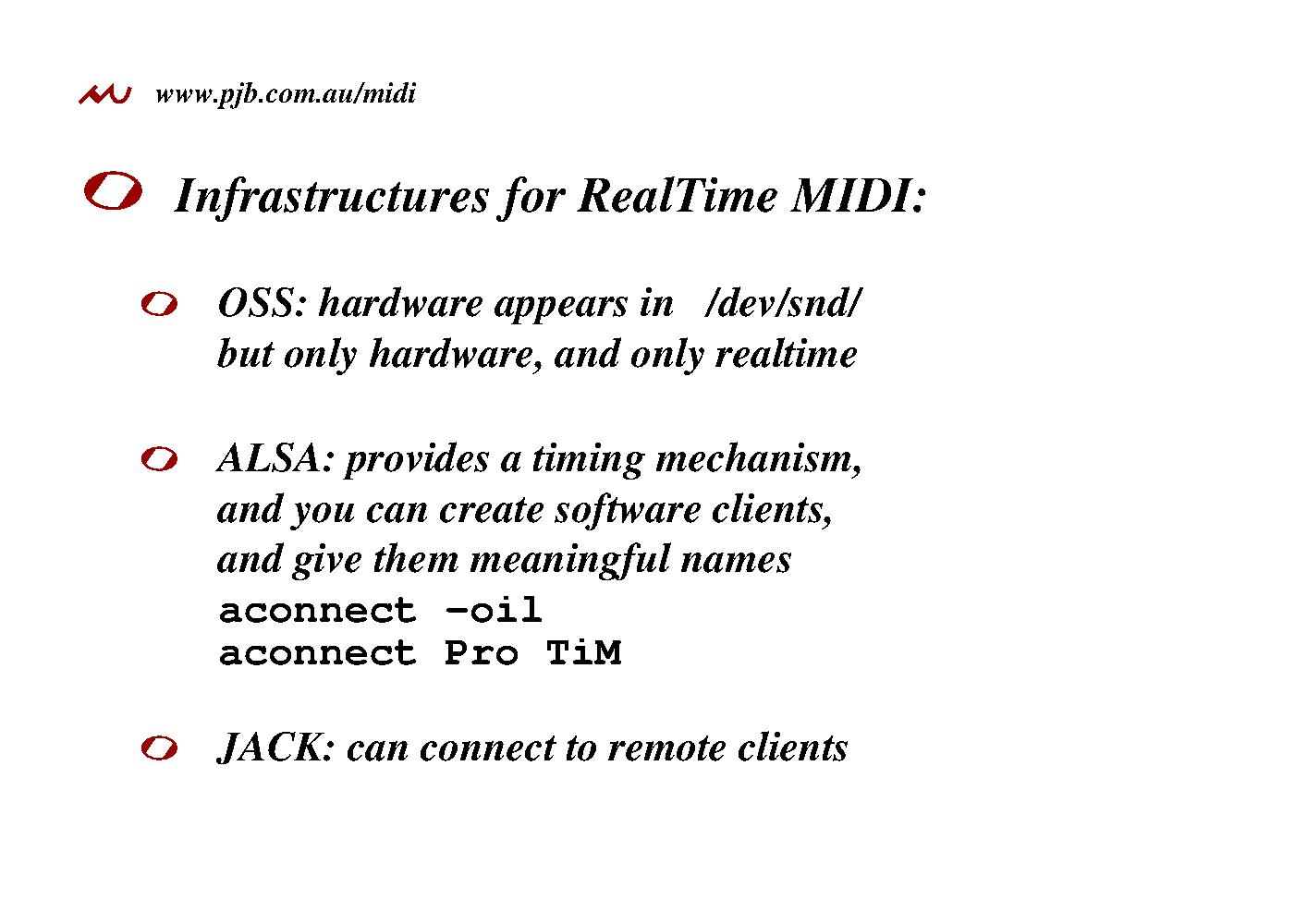

Linux has been through several generations of Real-Time MIDI.

The first was OSS, which just links the MIDI hardware to a

device in /dev/snd/.

That's all. It only handles hardware clients,

and it provides no timing-code;

everything's Real-Time, so every application has to write its own timing-code.

The device-filenames bear no relation to the device they represent.

ALSA-MIDI was a huge step forward. The clients have sensible names, you can create your own software clients, and it provides timing code and queueing, so you can give it events whenever's convenient and it will transmit them at the right moment. It's easy to see what's there and to connect and disconnect one client to another. On a single host, there's nothing more you could want, and because I work on one host, ALSA-MIDI is all I ever use.

JACK-MIDI then allows you also to connect a client on one host to a client on another, with very low latency; and if you're running a whole studio using several boxes, JACK-MIDI is what you need.

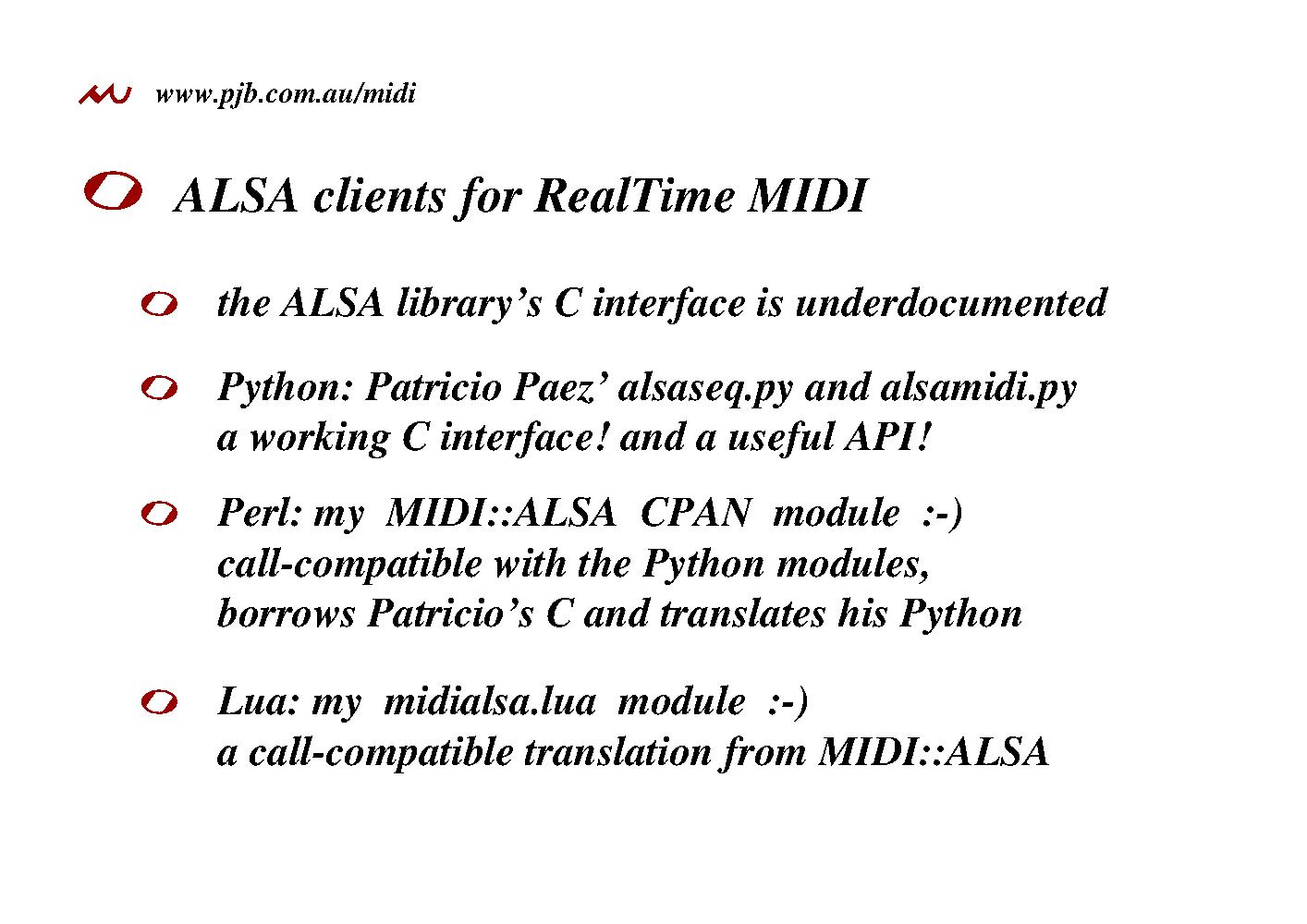

The ALSA-MIDI C interface is confusing, and for years I

was longing for a high-level-language module for Real-Time MIDI.

The log-jam was broken when I discovered Patricio Paez's

alsaseq

module, which had working C-code and a pretty good API.

So I translated this into my MIDI::ALSA CPAN module, which is almost completely API-compatible, with one extra function and a couple of bug-fixes.

I then translated that into my midialsa.lua module, which is totally API-compatible with the CPAN module, and is available as a Luarock.

But there's more to life than infrastructure, and I wrote these modules because I wanted to write apps using them, so now it's time to look at the website . . .

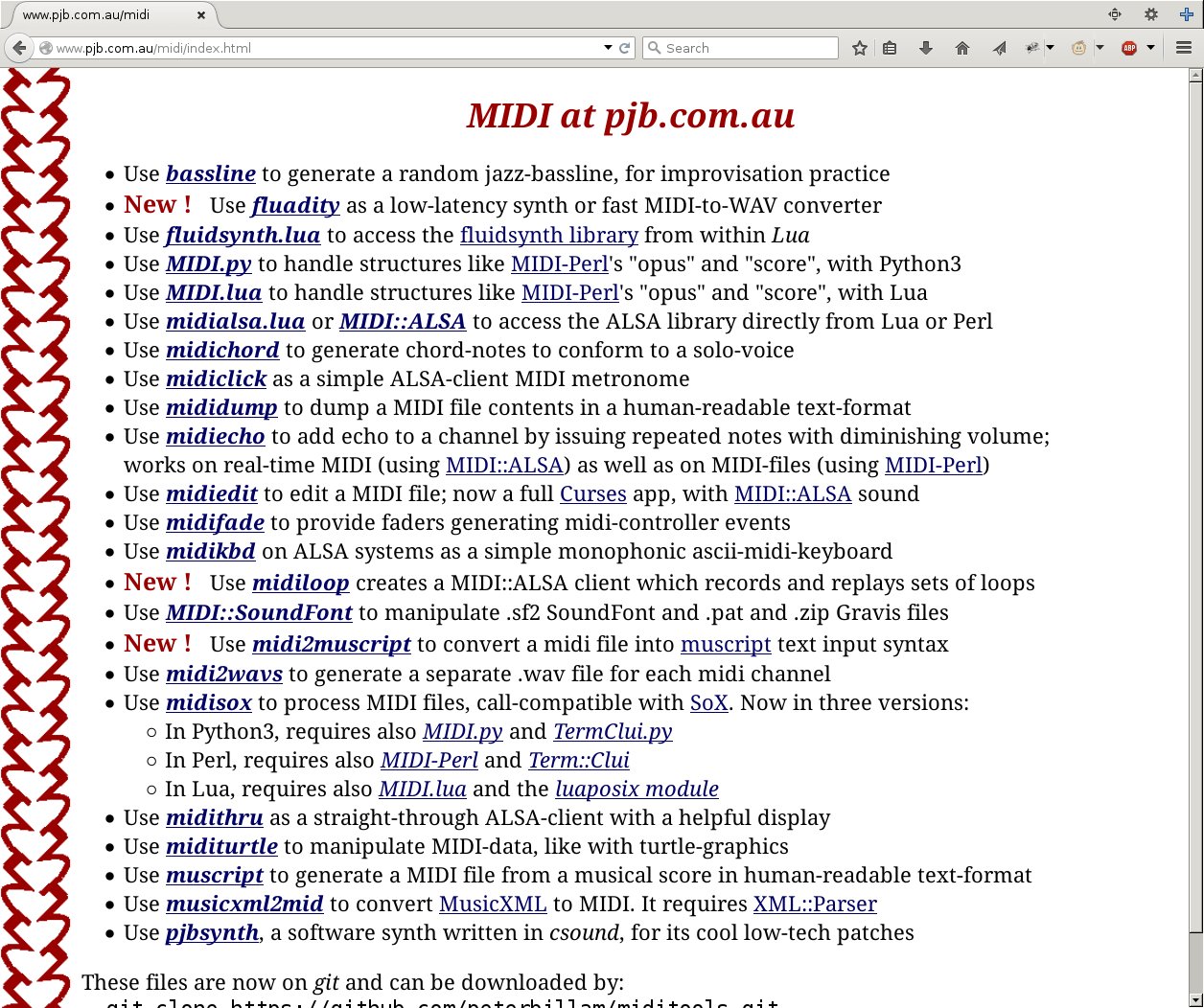

This is the front page; all the top section is my music,

which is not relevant here, but do check it out if you can read music;

and if you have friends that can read music,

let them know about it too.

The middle section has two links; muscript is my music-typesetting software, which lies between lilypond and abc; it has most of the flexibility of lilypond, and most of the simplicity of abc. It's all I ever use, and I use it all day every day. Check out the changes.

The link underneath that takes you to

www.pjb.com.au/midi . .

I use all of this stuff pretty much every day, and if there are ever any bugs, I'm the first to notice.

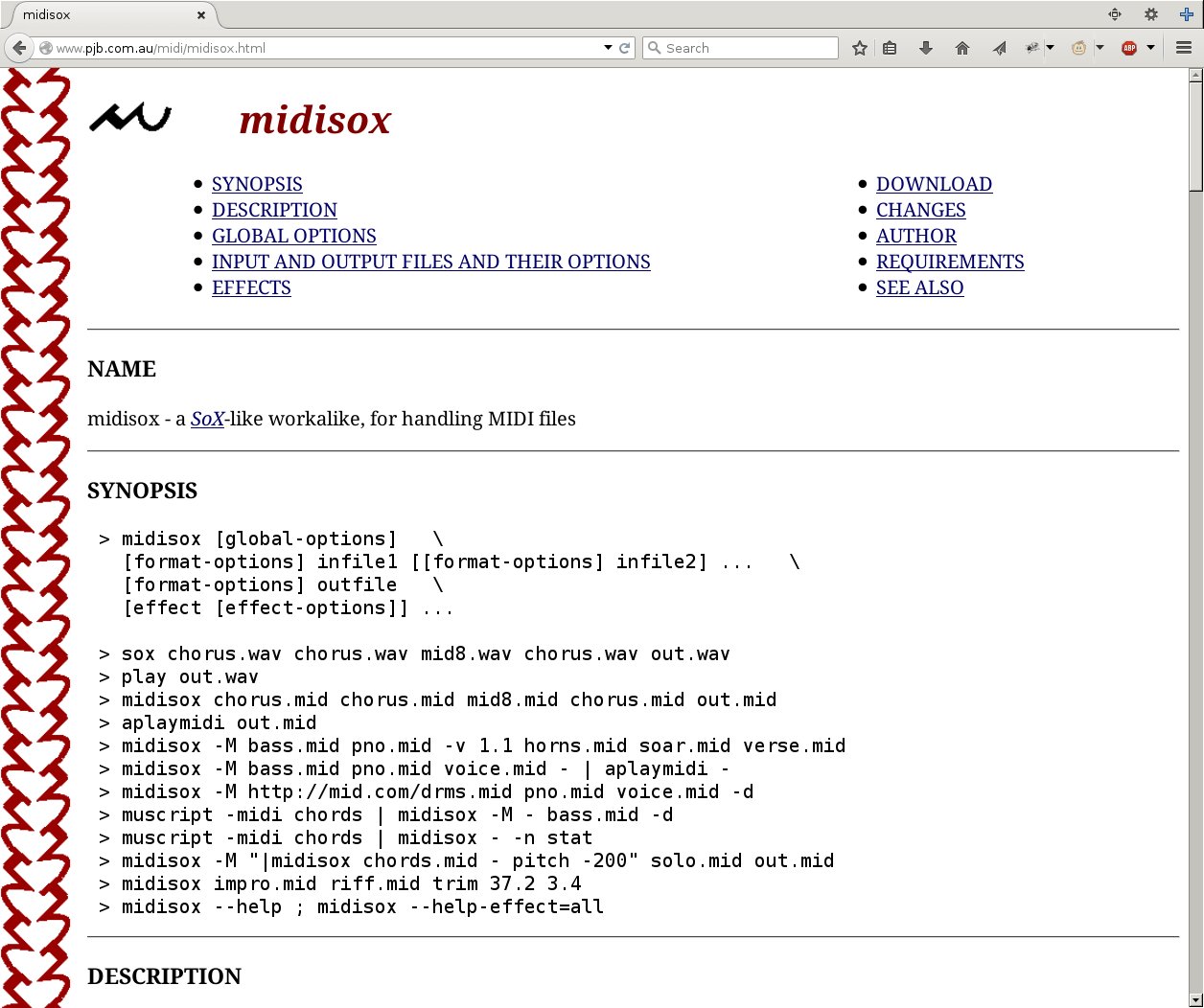

Today I'm going to talk about

midisox and midiedit,

which manipulate MIDI-files,

then about midiloop and midichord,

which manipulate Real-Time MIDI.

If there's time, we'll do a little demo of midiedit, and of midiloop.

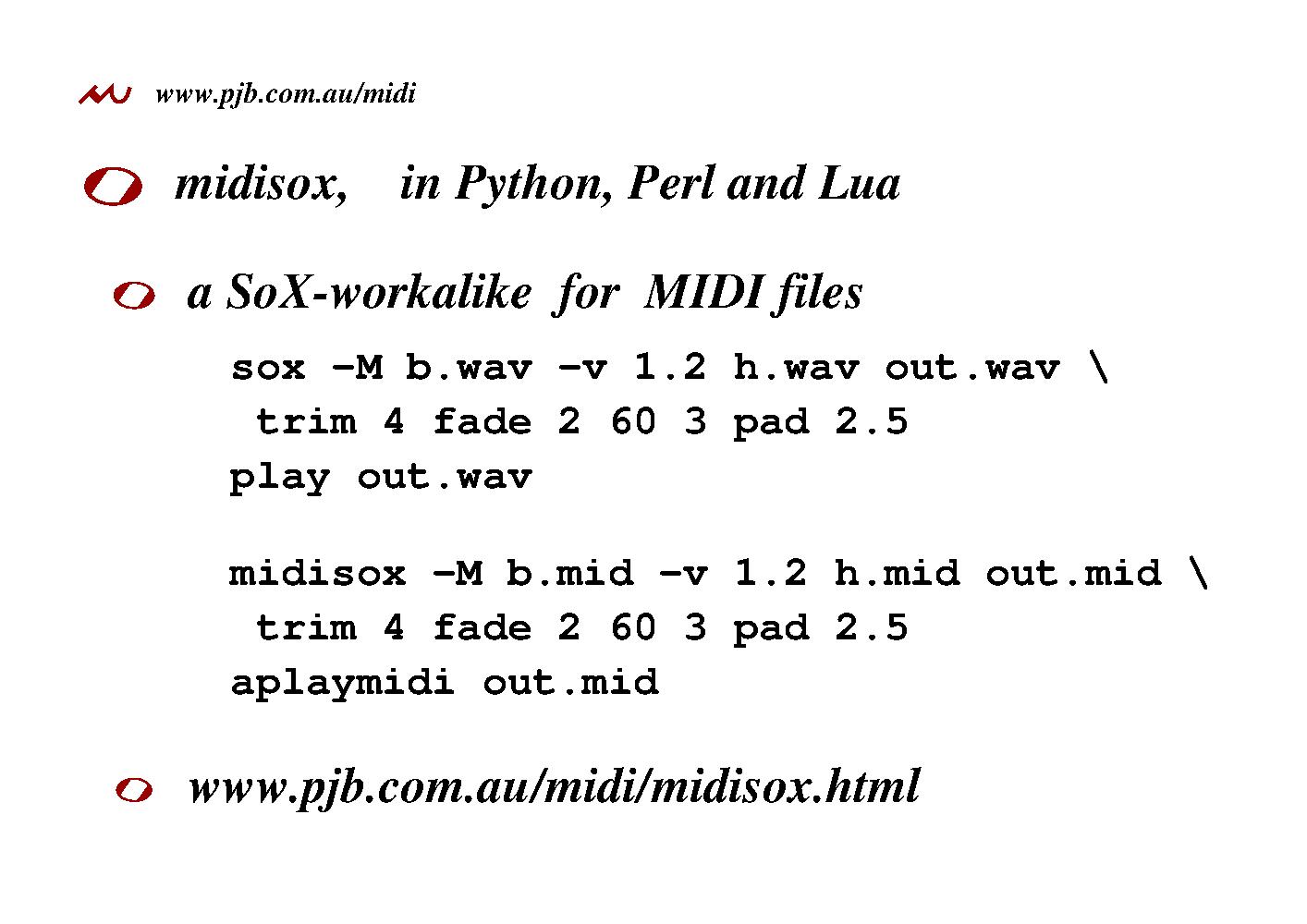

Sox, as you may well know, is a well-established tool for processing

audio files. It does things like mix them or concatenate them,

change the tempo or the key; it does tone controls and compression;

it can pad the beginning or end with silence, trim bits off,

fade in and fade out and many other effects.

It can do other stuff too, but all those examples are also relevant

to MIDI-files. So I wrote midisox, to be, as far as possible,

command-line-compatible with Sox.

In the first example, sox merges (-M) the recordings of the bass and the horns, b.wav and h.wav, increasing the volume of the horns by a factor of 1.2, and puts the result in out.wav, after it trims off the first four seconds, then fades in during two seconds, and with a total length of 60, fades out during the last three of those, then pads the beginning with two-and-a-half seconds of silence. You can then play out.wav with the play program.

The second example is the midisox equivalent: it merges b.mid

and h.mid, increasing the volume of the horns, and puts the result

into out.mid, after having trimmed off the first four seconds,

faded in and faded out, and padded the beginning, just like Sox.

Amazingly, without midisox, there was no good way of doing that stuff.

You can then play out.mid with the aplaymidi program.
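To give a feel for what these operations mean on MIDI data rather than on audio, here is a minimal sketch in Python. It is not midisox's code and makes no claim about how midisox works internally. The note layout (start, duration, channel, pitch, velocity, all times in milliseconds) and every function name are assumptions made for this example, the fades are left out, and notes that straddle the cut are simply dropped.

```python
# Each note is (start_ms, duration_ms, channel, pitch, velocity), with 1 tick = 1 ms.

def scale_volume(notes, factor):
    """Scale the velocities, keeping them inside the note-on range 1..127."""
    return [(s, d, c, p, max(1, min(127, round(v * factor))))
            for (s, d, c, p, v) in notes]


def merge(*tracks):
    """Combine several note lists into one, ordered by start time."""
    return sorted(note for track in tracks for note in track)


def trim_start(notes, offset_ms):
    """Drop notes that start before offset_ms and shift the rest earlier."""
    return [(s - offset_ms, d, c, p, v)
            for (s, d, c, p, v) in notes if s >= offset_ms]


def pad_start(notes, silence_ms):
    """Delay every note, leaving silence at the beginning."""
    return [(s + silence_ms, d, c, p, v) for (s, d, c, p, v) in notes]


bass = [(0, 500, 1, 36, 100), (4000, 500, 1, 38, 100)]
horns = [(4500, 250, 2, 60, 90), (5000, 250, 2, 62, 110)]

# Merge, with the horns 1.2 times louder; trim four seconds; pad 2.5 seconds.
out = pad_start(trim_start(merge(bass, scale_volume(horns, 1.2)), 4000), 2500)
print(out)
```

Because the data is a list of events and not a waveform, "louder" becomes a change of velocity, and trimming and padding become simple shifts of the start times.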

Midisox was first written in Python to road-test

my MIDI.py module,

so then I translated it into Lua to road-test

my MIDI.lua module,

and then I translated it into Perl to test some code which wrapped the

MIDI-Perl API.

I maintain it in all three languages, and they all work.

I have to say that the Lua version runs about twice as fast as

the Perl and Python versions.

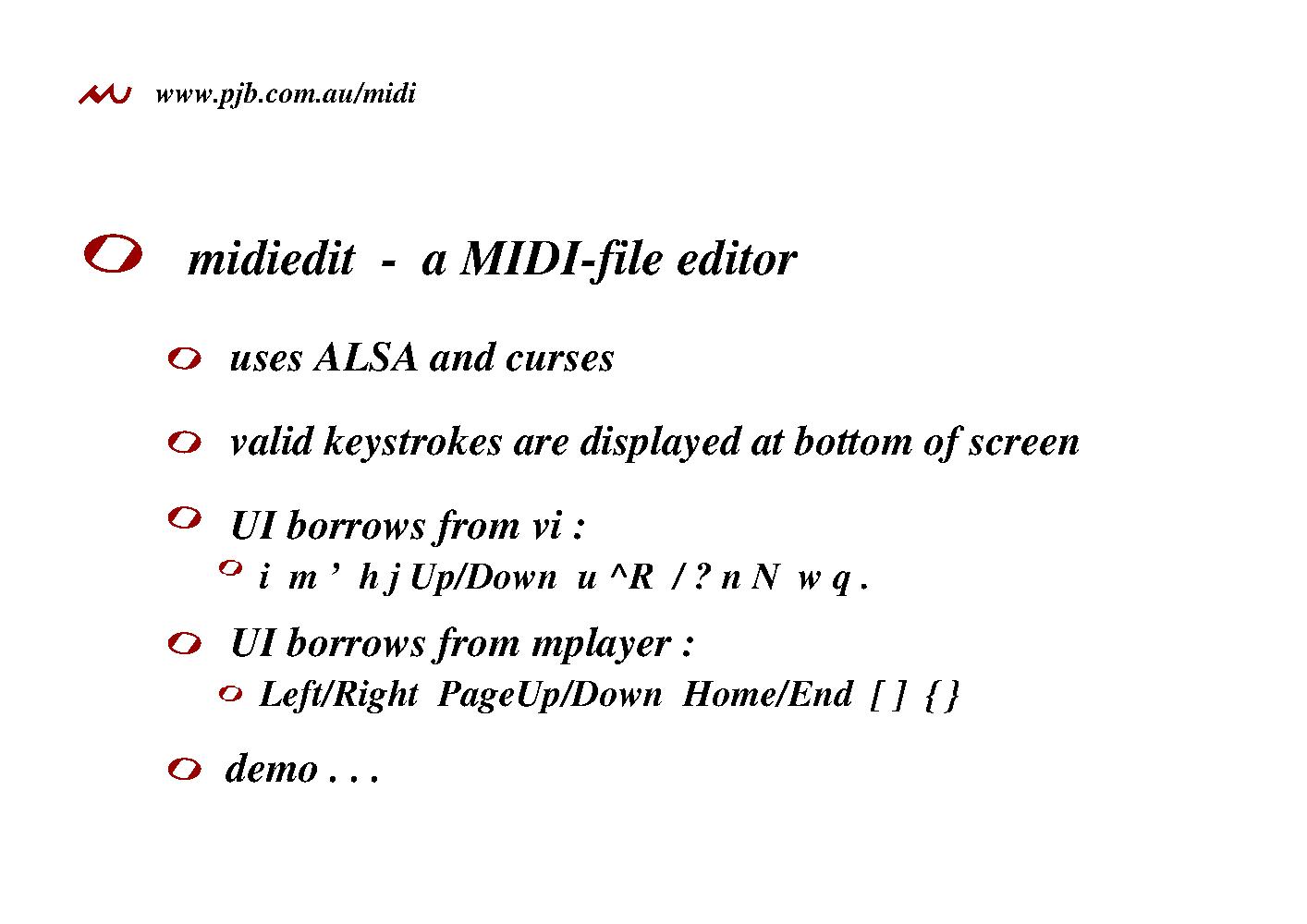

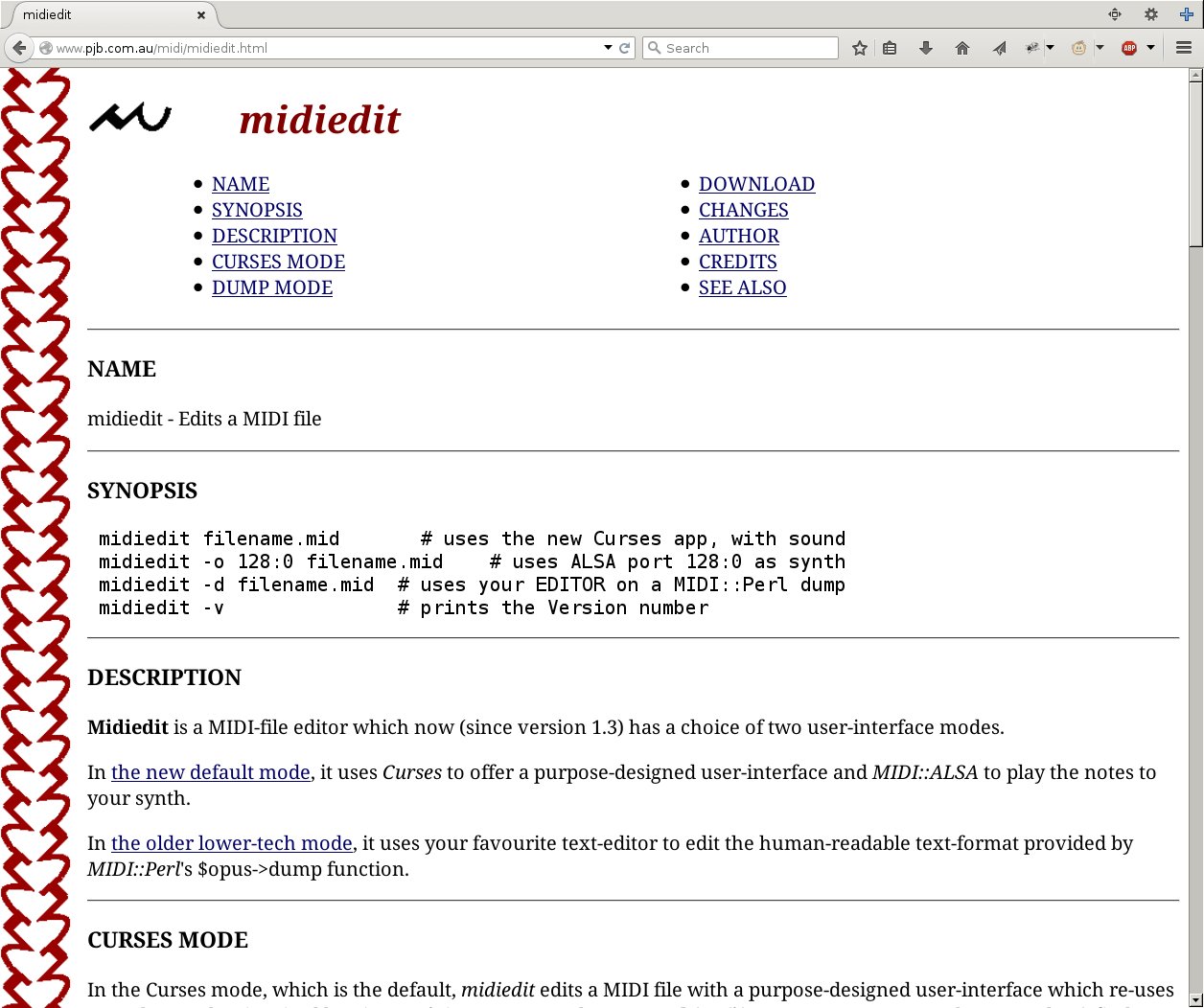

Before

midiedit,

the state-of-the-art in editing MIDI files was to

dump the data in some ascii format,

edit the ascii with Your Favourite Text Editor, then reconvert.

Midiedit still offers that, with the -d option:

midiedit -d foo.mid

But the default is now a self-documenting curses app

where the UI borrows keystrokes from mplayer

(including Left/Right PageUp/Down Home/End [ ] { } and so on),

and keystrokes from vi (including Up/Down,

mark and goto, undo and redo, find, repeat and so on).

As well as handling the MIDI-file, it also uses

MIDI::ALSA

to play the notes in Real-Time as you edit them.

There should be time for a little demo,

midiedit Man_Machine_Interface_2.mid

covering:

moving: Up Down | Left Right | PageUp PageDown | Home End | / ? n N

playing: Space | [ ]

editing: D | u ^R | e | . | i | R rd

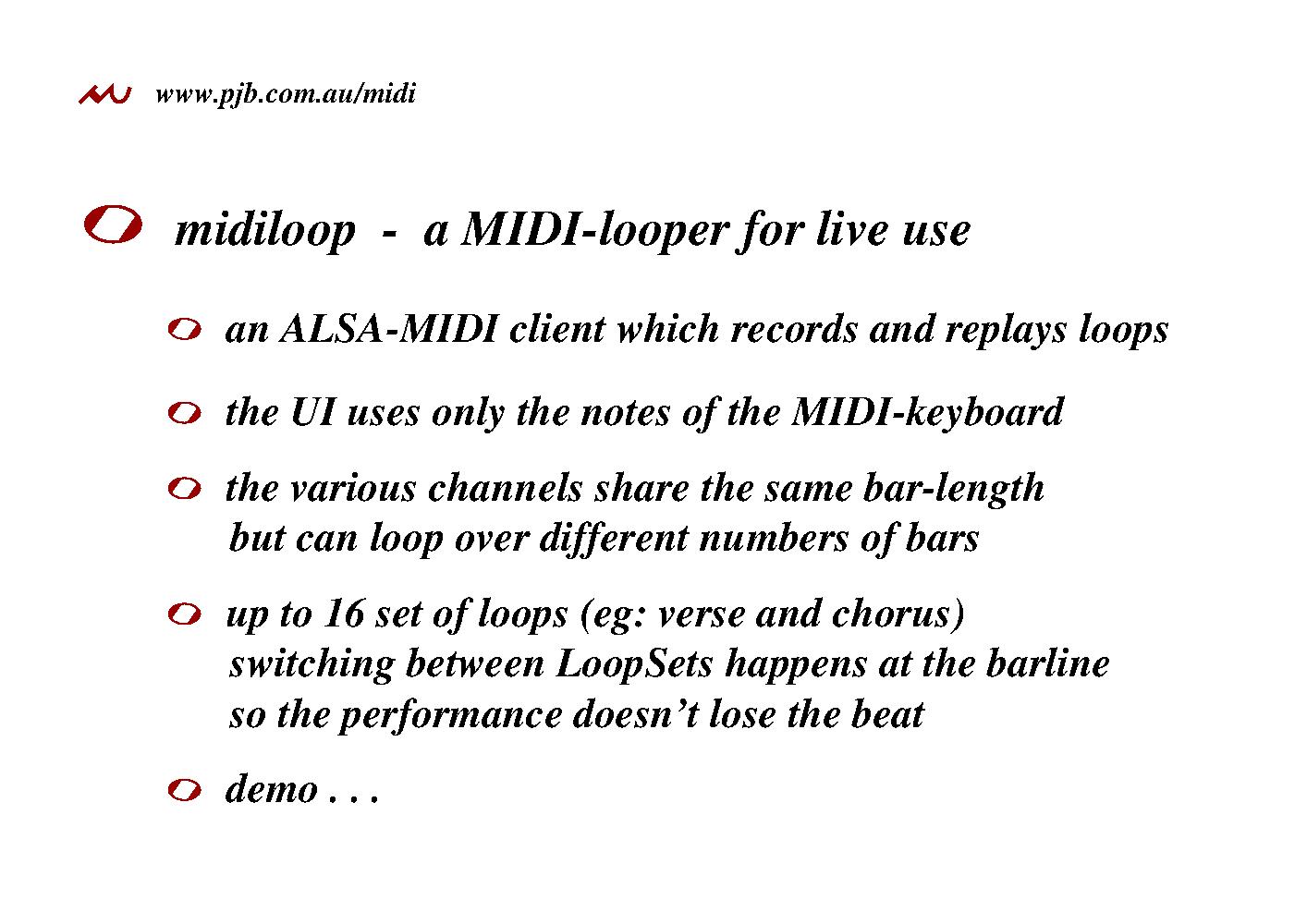

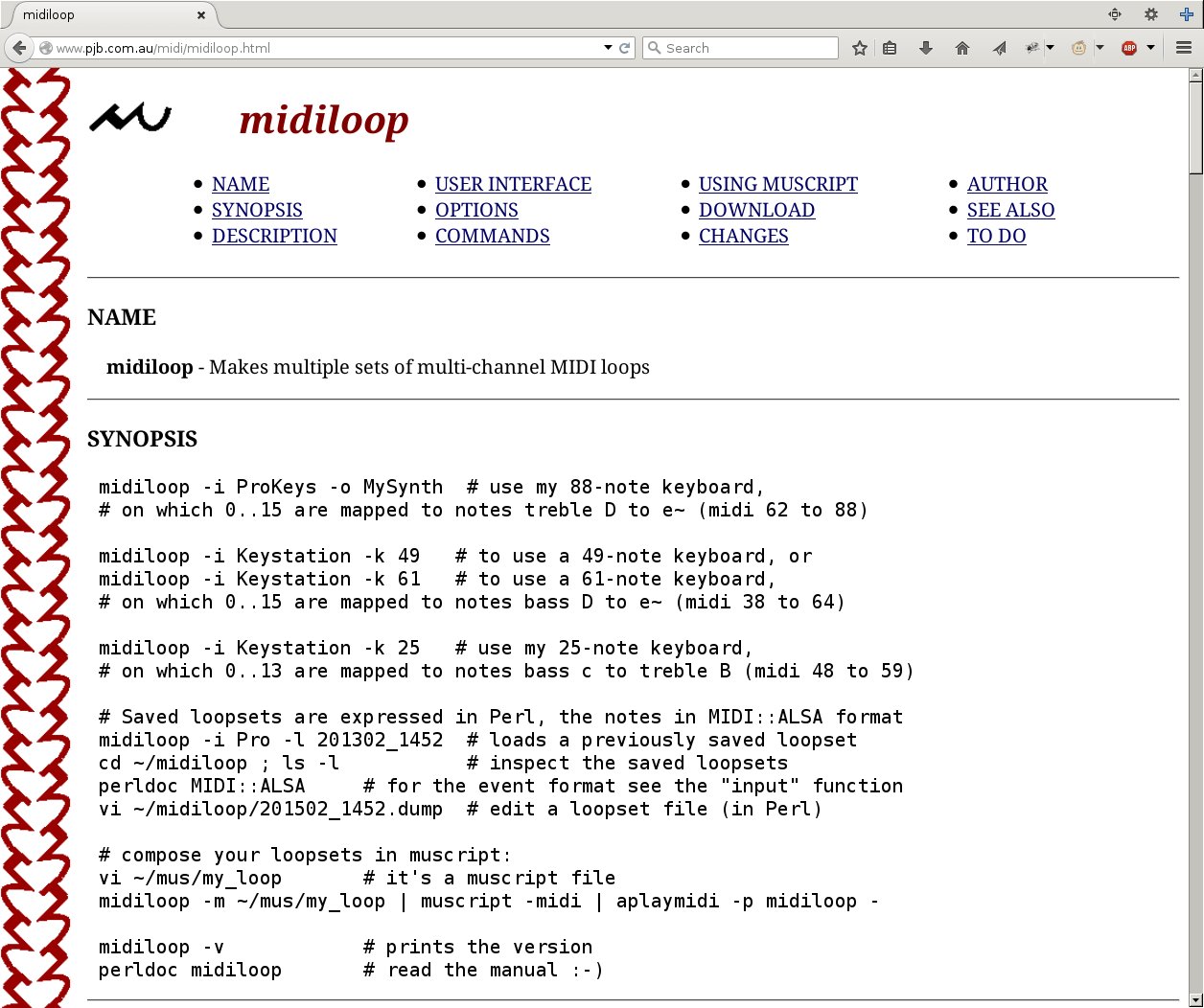

midiloop creates an ALSA-MIDI client, and records and replays sets

of loops. There can be up to sixteen LoopSets. Each LoopSet can have

up to sixteen midi-channels, each channel can be muted or unmuted.

All the user-interface is driven by the keys

of the MIDI-keyboard (not the computer-keyboard).

This gives fast operation in a performance situation.

The UI needs the computer-screen to be visible by the performer,

because that tells you the current status and what your options are.

The top note of the keyboard switches you into and out of command-mode.

All other data is entered using the numbers 0..15, which are mapped

onto some of the white notes.

Each LoopSet has a basic barlength in seconds, but each channel within that LoopSet can loop at an integer multiple (1 to 15) of that basic barlength. For example, the drums could loop at 1.8 seconds, but the bass could play a 3.6-second riff, and the organ could play a 7.2-second sequence.

All changes in what's being played (Play/Pause, Mute, Unmute, GotoMuting,

GotoLoopset) take place not immediately, but at the next barline.

This means a midiloop performance never loses the beat.
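The timing rules can be sketched with a little integer arithmetic. This is an illustration only, not midiloop's code; the function names are invented for the example, and times are kept in whole milliseconds to avoid rounding trouble.

```python
def loop_length_ms(barlength_ms, multiple):
    """Length of one channel's loop: an integer multiple (1..15) of the barlength."""
    if not 1 <= multiple <= 15:
        raise ValueError("multiple must be between 1 and 15")
    return barlength_ms * multiple


def next_barline_ms(now_ms, start_ms, barlength_ms):
    """Time of the first barline at or after now_ms."""
    bars = -(-(now_ms - start_ms) // barlength_ms)  # integer ceiling division
    return start_ms + bars * barlength_ms


bar = 1800                            # drums loop every 1.8 seconds
bass = loop_length_ms(bar, 2)         # 3.6-second riff
organ = loop_length_ms(bar, 4)        # 7.2-second sequence

# A Mute requested 4.0 seconds in is held back until the barline at 5.4 seconds.
print(bass, organ, next_barline_ms(4000, 0, bar))
```

Deferring every change to `next_barline_ms` is what keeps the loops aligned: nothing ever starts or stops part-way through a bar.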

There should be time for a little demo :

midiloop -i Keystation

channel 1 patch 4 , channel 0 patch 35

topnote 9=NewLoopset

1=Barlength=1.04sec

topnote 3=RecordChannel

2=Loop=2bars 1=WithMetronome

play... topnote

channel 1

topnote 3=RecordChannel

4=Loop=4bars 1=WithMetronome

play... topnote

topnote 1=Mute

0=Channel 1

topnote 0=Pause

topnote 11=SaveLoopsets

topnote 12=LoadLoopsets

1=menu-choice=agbadza_dance

topnote 0=Play

topnote 13=Quit

1=OK

cd ~/midiloop ; vi `ls -t | head -1`

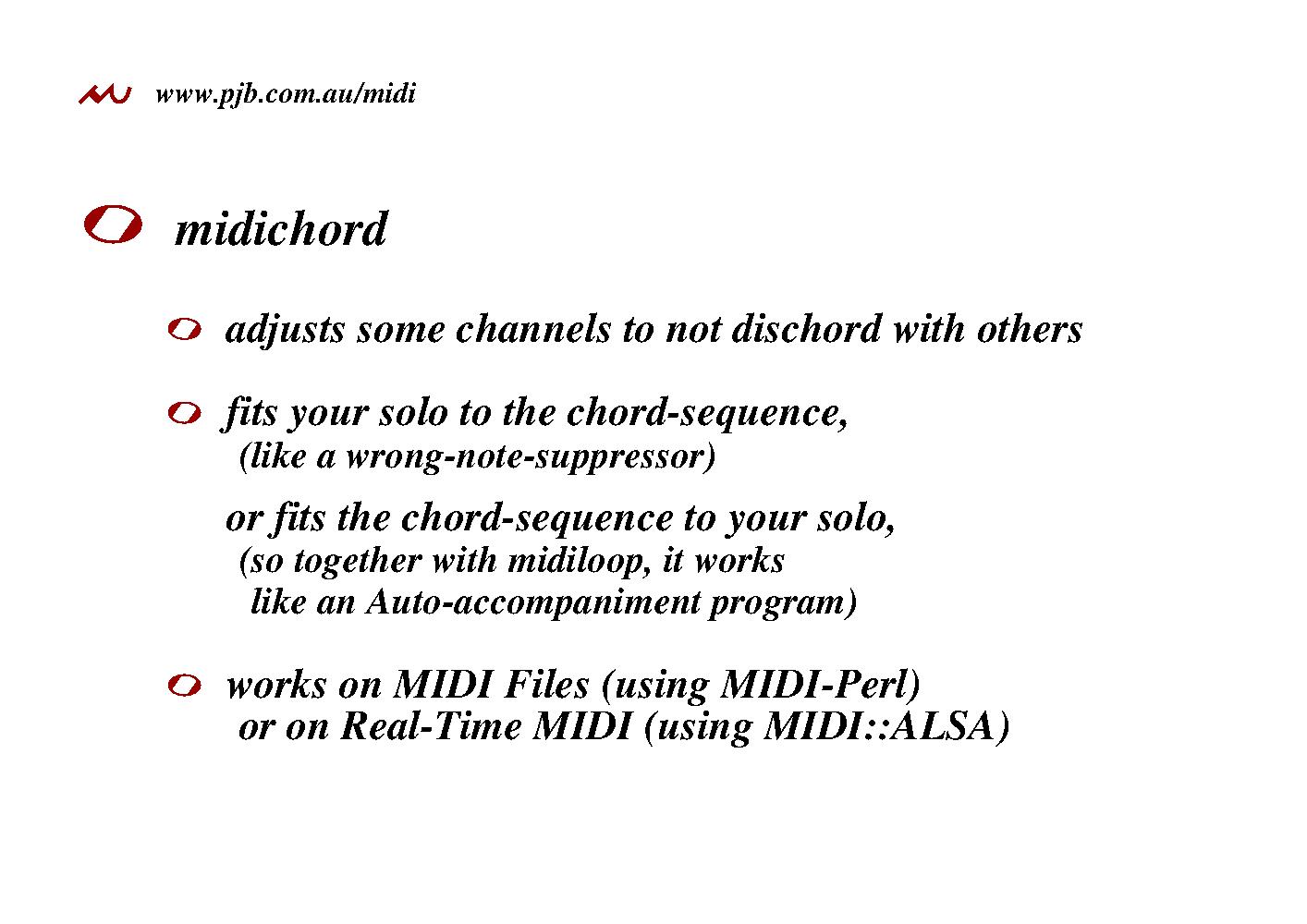

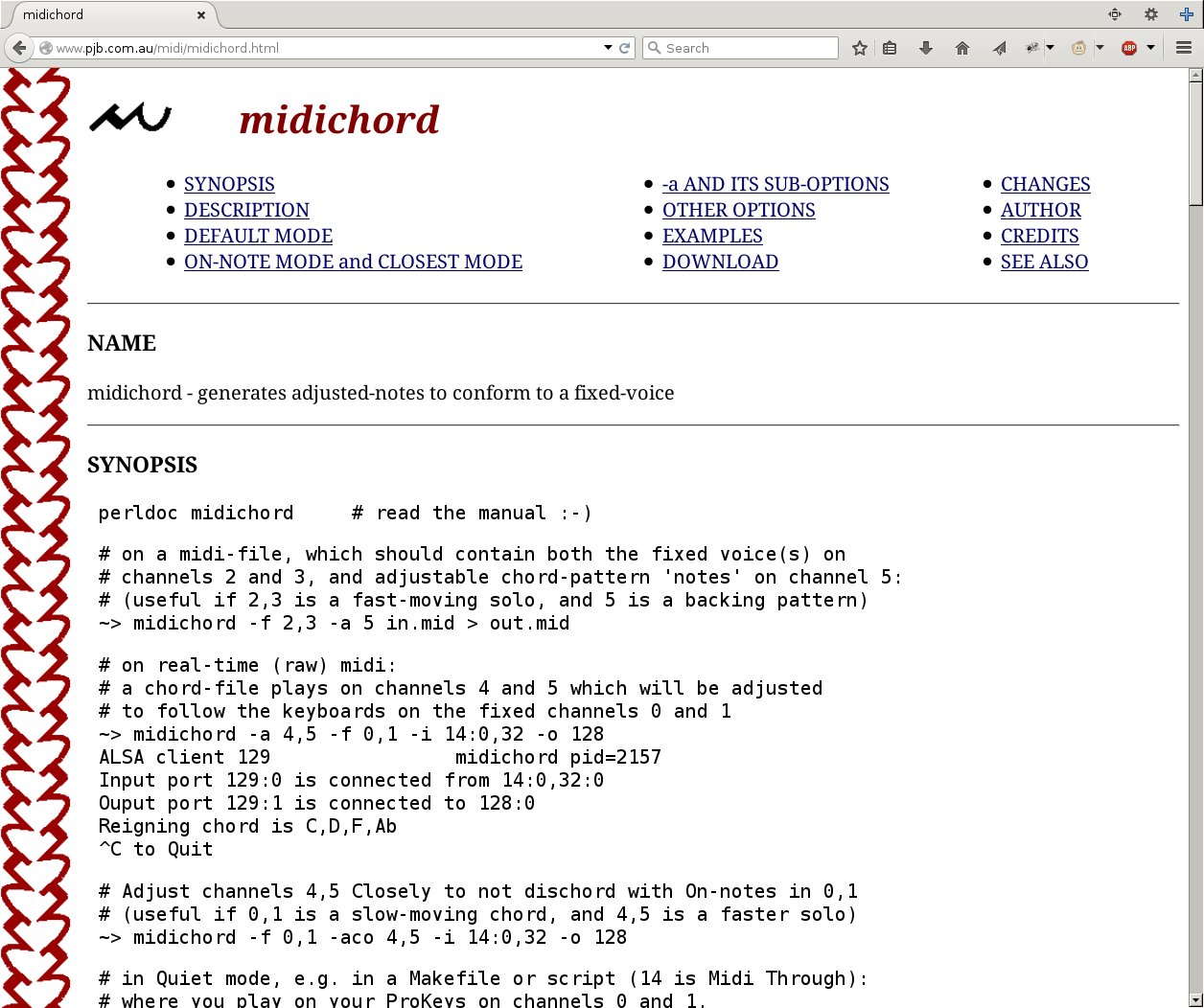

midichord

adjusts the pitches of the notes in some channels

(the -a option sets the adjust-channels)

to not dischord with the notes in other channels

(the -f option sets the fixed-channels).

It works either on MIDI Files (using

MIDI-Perl),

or in Real-Time MIDI (using

MIDI::ALSA).

It can either fit your solo to the chord-sequence (like a wrong-note-suppressor), or fit the chord-sequence to your solo (like an Auto-accompaniment program).
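The wrong-note-suppressor idea can be sketched roughly as below. This is a deliberately crude stand-in, not midichord's algorithm, whose actual rules are not described here. It assumes that "not clashing" simply means "shares a pitch-class with a note currently sounding in a fixed channel", and the function name is invented for the example.

```python
def nearest_consonant(pitch, fixed_pitches):
    """Move pitch to the nearest MIDI note whose pitch-class is sounding
    in the fixed channels; ties are resolved downwards."""
    allowed = {p % 12 for p in fixed_pitches}
    if not allowed:
        return pitch                    # nothing to fit to, leave the note alone
    for distance in range(7):           # any pitch-class lies within 6 semitones
        for candidate in (pitch - distance, pitch + distance):
            if 0 <= candidate <= 127 and candidate % 12 in allowed:
                return candidate
    return pitch


c_major = [48, 52, 55]                  # C, E and G held in a fixed channel
print([nearest_consonant(p, c_major) for p in (61, 64, 66)])
```

Here C sharp is pulled down to C, E is left alone, and F sharp is pushed up to G. Swapping the roles of the two channel sets gives the auto-accompaniment direction.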

The entire motivation for this talk was originally that I should give you, at this point, a self-indulgent live demo of midiloop and a MIDI-keyboard feeding through midichord to provide me with an Auto-accompaniment. Perhaps fortunately, there really isn't time for this, so we'll skip the demo.

This is a photo of my setup at home.

The lower keyboard is an M-Audio ProKeys Sono 88

which contains a little built-in synthesiser,

whose audio-output goes through the Zoom G3 effects-unit top left,

and then into the Yamaha HS5 speaker lower right.

The upper keyboard is an M-Audio Keystation 49, whose MIDI-output goes to the ASUS laptop top right, whose job is to run midichord, midiloop and stuff like that.

Of the pedals, the one on the right is the wah-wah pedal that controls the Zoom G3, and the two on the left are just on-off switches that plug into the two keyboards and work, by default, as their sustain-pedals.

Manufacturers make the wrong things.

There's a need for open participation in hardware design,

and the photo is there to try to illustrate a couple of the needs:

Keyboards need more pedal-sockets ! These ones have only one,

but there are about 60 relevant controllers on every channel !

It's what's called

"spoiling the ship for a halfpenny-worth of jack-sockets".

Church-organs have been stacking keyboards one behind the other for centuries, and the ergonomics are well known; you must not be forced to play far away from the body. Therefore, there should be no knobs obstructing the top surface of the lower keyboard (the ProKeys is relatively good, but there's still this one volume-control knob which gets in the way). Also, there should be better undercut at the front of the upper keyboard: preferably the same distance as the width of the flat surface of the lower.

There's a need for multiple-MIDI-pedal boards, say half-a-dozen pedals, with a good way of configuring each pedal for channel and controller. A good niche-manufacturing project . . .

Then, for people with the training to play bass-notes with their feet, we need affordable church-organ-style 30-note pedalboards. Another good niche-manufacturing project . . .

There's a need for better documentation of commercial gear. What's the System-Command to assign the pedal to a particular controller ? Or to set a keyboard to a particular channel ?

We could do this by organised lobbying of manufacturers, or perhaps even by 3D-printing . . .

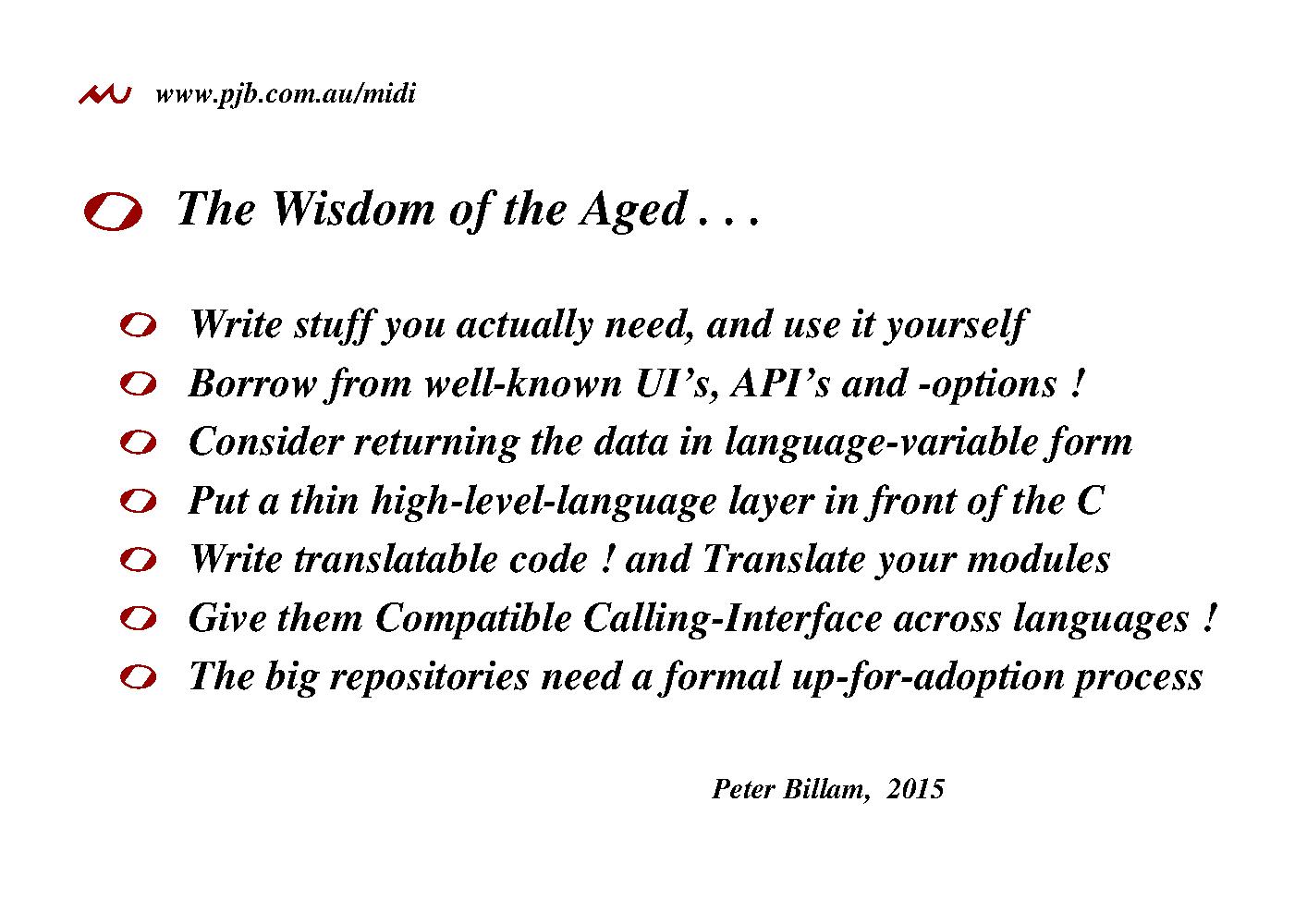

Here I reveal to you the Wisdom Of The Ages.

Write stuff you actually need, and use it yourself. This applies especially to Lone-Wolf developers.

Re-use well-known UIs, keystrokes, APIs and command-line-options. My usual nightmare-example is mplayer versus cvlc versus xine: their speed-adjust keystrokes, for example. Open-Source needs more of a UI-stability ethic . . .

If you're writing a module, try returning the data in language-variable form. So, if it's really a list, don't devise some home-brew class for it because then you'll have to re-implement push, pop, shift, unshift, slice, splice, reverse, map, grep, sort and all the other things users need to do with lists. If it's a list, return a list.

If you're writing a high-level language module that interfaces to a C-library, you can usually put in enough glue-code to expose your C-code directly to the calling application. Don't do that. Put a thin high-level-language layer in front of the C. That gets you a lot of flexibility for the future; for example you can easily check arguments and raise problems in a high-level-language-appropriate way. Glue-code does exist to do that, but it's hard to write and, especially, hard to translate.
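A minimal sketch of such a thin layer, in Python, might look like this. Both function names are hypothetical, and `_lowlevel_note_on` is only a stand-in for the real glue-code call into C; here it just builds the three bytes of a note-on message so that the example can run by itself.

```python
def _lowlevel_note_on(channel, pitch, velocity):
    """Stand-in for the glue-code call into the C library (hypothetical)."""
    return bytes([0x90 | channel, pitch, velocity])


def note_on(channel, pitch, velocity=64):
    """Thin high-level layer: check the arguments, then hand over to the C side."""
    if not 0 <= channel <= 15:
        raise ValueError(f"channel must be 0..15, not {channel!r}")
    if not 0 <= pitch <= 127:
        raise ValueError(f"pitch must be 0..127, not {pitch!r}")
    if not 0 <= velocity <= 127:
        raise ValueError(f"velocity must be 0..127, not {velocity!r}")
    return _lowlevel_note_on(channel, pitch, velocity)


print(note_on(0, 60, 100).hex())
```

A bad argument now raises an ordinary exception in the calling language before it ever reaches the C code, and the checks can be translated into another language along with the rest of the module.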

Write translatable code ! and Translate your modules ! If what you've written is any use, it will outlast any particular fashionable language. I have code that's now in its fifth language. Translatable code is clear code.

Give them Compatible Calling-Interfaces across languages ! Make it easier for module-users to translate their code.

The big module-repositories need a formal up-for-adoption process, like Debian has. Otherwise they will rot . . .

See:

The video of this talk: www.youtube.com/watch?v=GnNdxzrt_jc

github.com/peterbillam

www.pjb.com.au/midi

en.wikipedia.org/wiki/MIDI

comp.music.midi